As artificial intelligence aficionados, we are always excited to see a new piece of AI technology that stands out from the crowd. ChatGPT has flooded social media timelines and has been a trending topic since it crossed 1 million users less than a week after launch. Since then, thousands of users have shared how ChatGPT can answer questions, write short articles on specific topics, debug code, write song lyrics and poems, summarize essays, and even do their homework for them.

There is a reason for the hype: ChatGPT is built on a large language model (LLM) and answers pretty much anything you ask. LLMs are artificial intelligence tools that can read, summarize, and translate text, and that work by predicting the next words in a sentence. This allows the tech to generate sentences eerily similar to how humans write and talk. Although ChatGPT is not connected to the internet, it is trained on an enormous amount of historical data (pre-2021) and presents its findings in an easily consumable way.
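To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT is implemented; it swaps the neural network for simple word-pair counts, and the corpus and function names (`train_bigrams`, `predict_next`) are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus; a real LLM is trained on vastly more text.
corpus = "the bot answers the question and the bot books the flight"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "bot"
```

A real LLM replaces the counting table with a neural network trained on far more text, but the basic job of choosing a likely next word is the same.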

Even with its limitations, it may help you in multiple day-to-day activities, but does it make a solid replacement for conversational AI technology? The simple answer is a big NO.

Although large language models like ChatGPT are impressive pre-trained models that leverage AI in an exciting way, the limitations that keep them from serving as robust, intelligent virtual assistants are … substantial. Let’s take a deeper dive into the differences between these two pieces of technology and how businesses can use them to complement each other.

The Gap Between ChatGPT and Conversational AI

While ChatGPT and conversational AI platforms share some similarities, they are designed to serve different purposes and are not directly interchangeable. Conversational AI platforms are used to build and deploy chatbots and virtual assistants that users interact with through natural language conversations in order to reach a solution. These platforms typically include the range of tools and features a virtual assistant needs to function effectively, from building, designing, and deploying a bot to bot life cycle management, natural language processing (NLP) algorithms, machine learning models, and enterprise integrations.

In contrast, ChatGPT is a specific machine learning model that is designed to generate human-like text based on a given prompt or context.

While ChatGPT can be used to generate responses that might be used by a chatbot or virtual assistant, it does not have enough features to be able to replace a conversational AI platform on its own.
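The difference described above can be sketched in a few lines. This is only an illustration under stated assumptions: the order table, the keyword matcher, and every function name here are hypothetical stand-ins, not the Kore.ai XO Platform API or any real back-end system. The point is that a virtual assistant maps an utterance to an intent and then calls a back-end handler, which a text generator on its own does not do.

```python
from typing import Callable

# Hypothetical in-memory stand-in for an enterprise order system.
ORDERS = {"A100": "shipped", "A101": "processing"}


def check_order_status(order_id: str) -> str:
    """Back-end lookup; a real deployment would call an enterprise API here."""
    status = ORDERS.get(order_id)
    if status is None:
        return f"No order found with ID {order_id}."
    return f"Order {order_id} is {status}."


def detect_intent(utterance: str) -> str:
    """Toy keyword matcher; a real platform would use an NLP model instead."""
    text = utterance.lower()
    if "order" in text and ("status" in text or "where" in text):
        return "check_order_status"
    return "fallback"


# Each supported intent is wired to the function that fulfils it.
HANDLERS: dict[str, Callable[[str], str]] = {
    "check_order_status": check_order_status,
}


def respond(utterance: str, order_id: str) -> str:
    """Route the utterance to a handler, or fall back if no intent matches."""
    handler = HANDLERS.get(detect_intent(utterance))
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(order_id)


print(respond("Where is my order?", "A100"))  # prints "Order A100 is shipped."
```

The reply comes from the order data rather than from generated text, which is why the integration layer matters for transactional tasks.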

Key Limitations of ChatGPT as an Intelligent Virtual Assistant:

- Lacks Integrations for Transactional Tasks: ChatGPT is useful when it comes to responding with pre-trained knowledge, but most customers will need to interact with the agent for more complex transactions that require integrations with the enterprise’s back-end systems.

Some examples of tasks that a virtual assistant built using a conversational AI platform can handle are:

- Place and Process Orders

- Check Order and Delivery Status

- Report Problems and Issues

- Report Fraud

- Inquire about Events etc.

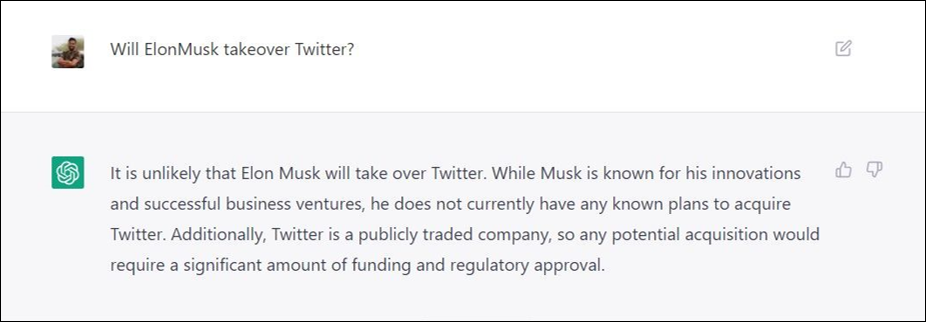

- Does not provide factually correct information: LLMs such as ChatGPT are natural language generation models designed to generate human-like text based on a given prompt or context. While these models can generate responses that might be appropriate in certain contexts, there is no way to guarantee that their responses are always factually correct.

LLMs such as ChatGPT learn to generate text from large datasets, but they lack access to external knowledge and an understanding of the real world, which limits the reliability of their output. As a result, they may generate responses that are based on incorrect, outdated, or inappropriate information for the context in which they are used.

OpenAI confirms that ChatGPT “sometimes writes plausible-sounding but incorrect or nonsensical answers.” This could lead to unhappy customers and legal issues for an enterprise. With a conversational AI platform, every response a virtual assistant gives can be tailored by an experienced copywriter so that users enjoy seamless and precise conversations.

- Can’t solve enterprise-specific problems: While these models can generate responses that might be appropriate in certain contexts, they are not able to handle organization-specific queries or FAQs on their own. For this level of specificity, you will need a chatbot or virtual assistant, like those powered by conversational AI, that can be trained on specific datasets and can integrate with your organization’s current systems and processes to build appropriate questions and replies for customers.

- Not an option for on-premises deployments: Hosting an LLM such as GPT-3 in an on-premises environment can be challenging due to the model’s size and complexity, and it requires the necessary hardware and infrastructure to support it onsite. This may include a powerful server or cluster of servers with a high-speed network connection and sufficient memory and storage to support the model’s operations. In addition to the hardware and infrastructure requirements, a technician with the right expertise and resources to install, configure, and manage the model would need to be available for your on-premises environment.

- Data privacy and security are a concern

Virtual assistants are designed to manage a substantial amount of confidential information, and many conversational AI platforms prioritize security and provide the tools to enforce it. However, there are several security considerations when it comes to LLMs and ChatGPT, like:

- Collection and use of personal data: LLMs and other NLP models may process and analyze personal data, such as names, addresses, and other identifying information, as part of their operations. It is important to ensure that this personal data is collected and used in a way that is compliant with relevant data protection laws and regulations, such as the General Data Protection Regulation (GDPR) in the European Union.

- Security and confidentiality: LLMs and other NLP models may be used to process sensitive or confidential data, such as financial or medical information. It is important to ensure that appropriate security measures are in place to protect this data from unauthorized access or disclosure.

- Data storage and retention: LLMs and other NLP models may generate or store large amounts of data as part of their operations. It is important to have a clear and transparent data retention policy in place, and to ensure that data is stored and handled in a way that is compliant with relevant data protection laws and regulations.

- Data sharing and access: LLMs and other NLP models may be used to process data that is shared with or accessible by third parties, such as service providers or partners. It is important to ensure that appropriate safeguards, like those built into the Kore.ai XO Platform, are in place to protect this data from unauthorized access or disclosure.
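One common safeguard for the concerns listed above is to mask personal data before any text leaves your systems for a third-party model. The sketch below is a minimal illustration, assuming two simplified regular expressions for email addresses and phone numbers. The patterns and the `redact` helper are hypothetical, will miss many real-world formats, and do not describe how Kore.ai or OpenAI handle data.

```python
import re

# Simplified, illustrative patterns; production PII detection needs far more care.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


message = "Contact jane.doe@example.com or call +1 415-555-0100 about my refund."
print(redact(message))
# prints "Contact [EMAIL] or call [PHONE] about my refund."
```

Redaction of this kind only reduces exposure. It does not replace a data retention policy or the compliance review that regulations such as GDPR call for.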

How ChatGPT Complements Conversational AI Platforms like Kore.ai XO Platform

By integrating with aspects of LLMs, Kore.ai is able to maximize its potential in various areas and expedite bot development. To illustrate, some of these features include:

- Knowledge AI: Integration with models like OpenAI’s makes it possible to identify and auto-generate answers. It provides the ability to automatically answer FAQs from PDF documents without needing to extract them or train the Knowledge Graph.

- Automatic Intent Recognition: Virtual assistants can automatically identify the right intent by understanding the language and semantic meaning of the utterance using pre-trained large language models. You do not have to provide any training utterances.

- Generate Better Test Data: Using large language models, we are able to auto-generate large amounts of test data in a fraction of the time, which helps to keep improving human-bot interactions.

- Slot and Entity Identification: We can leverage LLMs to speed up dialog development by auto-generating slots and entities for specific use cases. This leaves the developer to review them and then simply wire up the integration with their backend per enterprise business rules.

- Generate Prompts and Messages: During training, LLMs can be used to save a massive amount of time by generating initial prompts and messages that will potentially be presented to the end user. Instead of writing everything from scratch, these human-like messages can simply be edited by copywriters to ensure that enterprise-specific standards are being followed in responses.

- Build Sample Conversations: LLMs can generate whole sample conversations between the bot and a user for any given use case, which creates an excellent starting point for Conversation Designers to fine-tune responses.
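As a rough sketch of the automatic intent recognition idea from the list above, an intent can be identified by handing a pre-trained model the list of intent names in a prompt instead of training utterances. Everything here is an assumption for illustration: the intent names, the prompt wording, and `fake_llm`, which stands in for a real model call so the example runs offline. It is not the XO Platform's implementation.

```python
from typing import Callable

# Hypothetical intent names, loosely based on the task examples earlier in the article.
INTENTS = ["place_order", "check_order_status", "report_fraud"]


def build_prompt(utterance: str, intents: list) -> str:
    """Describe the classification task in plain language for the model."""
    options = ", ".join(intents)
    return (
        "Classify the user message into exactly one of these intents: "
        f"{options}. Reply with the intent name only.\n"
        f"User message: {utterance}"
    )


def classify(utterance: str, intents: list, complete: Callable[[str], str]) -> str:
    """Ask the model for an intent and reject anything outside the allowed list."""
    reply = complete(build_prompt(utterance, intents)).strip().lower()
    return reply if reply in intents else "unknown"


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; replace with your provider's client."""
    return "check_order_status" if "where is my" in prompt.lower() else "no idea"


print(classify("Where is my package?", INTENTS, fake_llm))  # prints "check_order_status"
```

Checking the reply against the allowed list matters because, as noted earlier, a model can return plausible-sounding but wrong text.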

ChatGPT Only Solves Part of the Conversational AI Question

ChatGPT, like other LLMs, falls short when it comes to providing the features and capabilities that enterprise-grade deployments require. Conversational AI platforms, like XO Platform, allow businesses and organizations to manage an end-to-end lifecycle that includes designing, training, testing, and deploying IVAs that can interact with customers and users in a natural, human-like way. These platforms typically provide tools and frameworks for designing, building, and deploying chatbots, as well as APIs and other integration options for connecting the chatbots to various communication channels, such as websites, messaging apps, and voice assistants.

At Kore.ai, we have spent significant time exploring the exciting possibilities of ChatGPT in the enterprise context and have concluded that its impact is not substantial enough to be compared to more robust conversational AI solutions like ours.

Overall, ChatGPT is a powerful tool that can be used to improve the quality and effectiveness of chatbot conversations, but it is just one piece of the puzzle when it comes to building and deploying a successful conversational AI platform. For those looking to develop witty comebacks or test pop culture references, LLMs are the perfect option. But at the end of the day, customers expect swift and effective problem-solving – and that’s why AI-driven virtual assistants stand out as truly intelligent customer service solutions.

Grab a chance to try the industry-leading Kore.ai XO Platform for free!