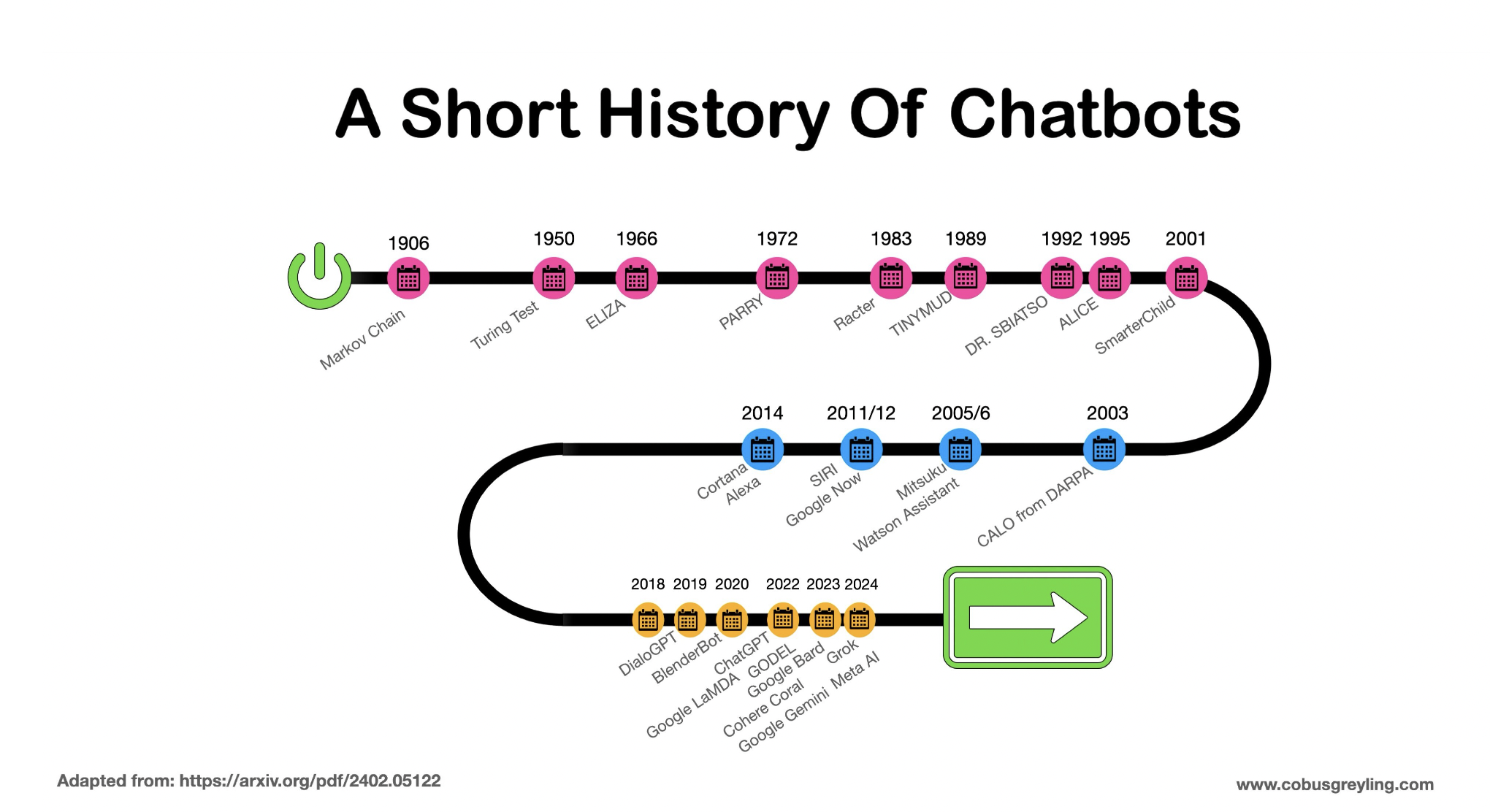

The evolution of chatbot technology has been remarkable, progressing from rudimentary rule-based systems to today’s sophisticated conversational agents driven by artificial intelligence.

Introduction

Initially, chatbots operated on a template-based approach, relying on rigidly defined rules and hardcoded logic to navigate conversations.

These early systems employed static text responses, with limited variability, and incorporated variables to adapt to user inputs.

Natural Language Understanding (NLU) capabilities were rudimentary, allowing for basic comprehension of user queries.

However, as technology progressed, chatbots evolved beyond these constraints. They began to incorporate machine learning algorithms and advanced NLU models, enabling them to understand context, infer user intent, and generate dynamic responses. This shift marked the transition from rule-based systems to more intelligent and adaptable conversational agents.

Pattern Recognition

In the early stages of chatbot development, pattern recognition was a fundamental component of chatbot functionality. Early chatbots relied on simple pattern-matching techniques to interpret user inputs and generate appropriate responses.

These systems used rule-based algorithms to identify recurring patterns in user queries and map them to predefined responses. While effective for handling basic interactions such as FAQs, this approach struggled with nuanced language, context, digressions, utterances containing multiple intents, and ambiguous input that required disambiguation.
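Here is a minimal Python sketch of template-style pattern matching that makes the limitation concrete. The rule table, patterns and responses are hypothetical. Each regex maps to one predefined response, and a named group captured from the input fills a template variable. The first matching rule wins, so an utterance that carries two intents only triggers one of them.

```python
import re

# Hypothetical rule table: each regex maps to a fixed response template.
# Named groups captured from the user input fill the template's variables.
RULES = [
    (re.compile(r"\bmy name is (?P<name>\w+)", re.IGNORECASE), "Nice to meet you, {name}!"),
    (re.compile(r"\b(opening hours|open)\b", re.IGNORECASE), "We are open from 9am to 5pm, Monday to Friday."),
    (re.compile(r"\breset (my )?password\b", re.IGNORECASE), "You can reset your password under Settings > Security."),
]

FALLBACK = "Sorry, I didn't understand that. Could you rephrase?"


def respond(user_input: str) -> str:
    """Return the response of the first rule whose pattern matches the input."""
    for pattern, template in RULES:
        match = pattern.search(user_input)
        if match:
            # Insert captured variables (if any) into the static template.
            return template.format(**match.groupdict())
    return FALLBACK


if __name__ == "__main__":
    print(respond("Hi, my name is Ada"))  # Nice to meet you, Ada!
    print(respond("When are you open?"))  # We are open from 9am to 5pm, Monday to Friday.
    # Two intents in one utterance: only the opening-hours rule fires,
    # and the password request is silently ignored.
    print(respond("I want to reset my password and also check when you open"))
```

The same input always produces the same output. That is the predictability described in the next section, and nothing in the rule table can resolve a digression or a multi-intent request.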

Despite its constraints, pattern recognition laid the groundwork for more advanced AI technologies in chatbots.

It provided a foundation for understanding user intent and guided the evolution towards machine learning-based models capable of dynamic conversation and context-aware responses.

As chatbot technology continues to advance, pattern recognition remains an essential element, albeit within a broader framework of sophisticated algorithms and neural networks.

Chatbot Vulnerabilities

While pattern matching with templates laid the early foundations of chatbot development, this approach has notable limitations.

Responses generated through pattern matching tend to be:

- Predictable,

- Repetitive, &

- Lacking the nuanced human touch necessary for engaging conversations.

These systems struggle to manage conversational context and often retain no memory of past responses, which creates a risk of repetitive, looping dialogues.

These drawbacks underscored the need for more capable chatbot technology.

Modern chatbots employ more sophisticated algorithms, including natural language processing and machine learning, to deliver dynamic and contextually relevant interactions, thus addressing the shortcomings of early template-based approaches.

In the next section I’m recapping some thoughts I wrote about earlier in the week…

LLM Disruption of Chatbot Development Frameworks

The graphic below shows the different components and attributes of a Large Language Model (LLM). The challenge lies in accessing these features at the right moments while ensuring stability, predictability and, to some degree, reproducibility.

Many organisations and technology providers are currently navigating the shift from traditional chatbots to integrating Large Language Models, with mixed results.

✨Traditional Chatbot IDEs

Traditionally, chatbots have primarily comprised four basic elements.

However, in recent times, there have been numerous efforts to rethink this structure.

The primary objective has been to alleviate the rigidity associated with hard-coded and fixed architectural elements, thereby fostering greater flexibility and adaptability within chatbot systems.

✨Natural Language Understanding (NLU)

Within the chatbot framework, the Natural Language Understanding (NLU) engine stands as the sole “AI” component, responsible for detecting intents and entities from user input.

Accompanying this engine is a Graphical User Interface (GUI) designed to facilitate the definition of training data and the management of the model.

Together, these elements form the core infrastructure for empowering the chatbot to comprehend and respond intelligently to user interactions.
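The sketch below gives a rough idea of what an NLU engine returns. It classifies an utterance into an intent and extracts entities. It is a toy built on stated assumptions. The intents, training phrases and entity patterns are hypothetical, and word-overlap (Jaccard) scoring stands in for the trained machine learning classifier a real NLU engine would use.

```python
import re
from dataclasses import dataclass, field

# Hypothetical training data: example utterances per intent, as would be
# defined through an NLU engine's GUI.
TRAINING_DATA = {
    "check_balance": ["what is my balance", "how much money do i have", "show my account balance"],
    "transfer_money": ["send money to a friend", "transfer funds", "i want to transfer money"],
    "greeting": ["hello", "hi there", "good morning"],
}

# Hypothetical regex-based entities, similar in spirit to predefined system entities.
ENTITY_PATTERNS = {
    "amount": re.compile(r"\$?(\d+(?:\.\d{2})?)"),
    "currency": re.compile(r"\b(usd|eur|gbp)\b", re.IGNORECASE),
}


@dataclass
class NluResult:
    intent: str
    confidence: float
    entities: dict = field(default_factory=dict)


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphabetic word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def parse(utterance: str, threshold: float = 0.3) -> NluResult:
    tokens = tokenize(utterance)
    best_intent, best_score = "fallback", 0.0
    for intent, examples in TRAINING_DATA.items():
        for example in examples:
            ex_tokens = tokenize(example)
            # Jaccard overlap between the utterance and one training example.
            score = len(tokens & ex_tokens) / len(tokens | ex_tokens)
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        best_intent = "fallback"  # below threshold: treat as not understood
    entities = {}
    for name, pattern in ENTITY_PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            entities[name] = match.group(1)
    return NluResult(best_intent, round(best_score, 2), entities)
```

For example, `parse("I want to transfer 50 EUR")` yields the intent `transfer_money` with confidence 0.67 and the entities `amount` = "50" and `currency` = "EUR". An utterance with no close training example falls back. This small, fast and predictable behaviour is what the advantages below refer to.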

Typical advantages of NLU engines are:

✦ Numerous open-source models.

✦ NLU engines have a small footprint & are not resource intensive, so local & edge installations are feasible.

✦ No-Code UIs.

✦ NLUs have been around for so long that large corpora of named entities exist.

✦ Predefined entities & training data for specific verticals, like banking, help desks, HR, etc.

✦ Rapid model training; in a production environment, models can be retrained multiple times per day.

✦ Creating NLU training data was one of the first areas where LLMs were introduced.

✦ LLMs were used to generate training data for the NLU model based on existing conversations and sample training data.

✨Conversation Flow & Dialog Management

The dialog flow & logic are designed & built within a no-code to low-code GUI.

The flow and logic are essentially a predefined path with predefined decision points. The conversation proceeds according to whether the input data matches the criteria of each logic gate.

There have been efforts to introduce flexibility into the flow for some semblance of intelligence.
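A predefined flow with logic gates can be sketched as a small state machine. The node names, prompts and conditions below are hypothetical. Each node lists its gates in order, and the first condition that evaluates to true decides the next node. If no gate matches, the bot stays on the same node, which is how rigid flows end up repeating themselves.

```python
# Hypothetical flow: each node has a prompt and ordered (condition, next_node) gates.
# A condition receives the detected intent and the slots (entities) collected so far.
FLOW = {
    "start": {
        "prompt": "Do you want to check your balance or make a transfer?",
        "gates": [
            (lambda intent, slots: intent == "check_balance", "show_balance"),
            (lambda intent, slots: intent == "transfer_money" and "amount" in slots, "confirm_transfer"),
            (lambda intent, slots: intent == "transfer_money", "ask_amount"),
        ],
    },
    "ask_amount": {
        "prompt": "How much would you like to transfer?",
        "gates": [(lambda intent, slots: "amount" in slots, "confirm_transfer")],
    },
    "show_balance": {"prompt": "Here is your balance.", "gates": []},
    "confirm_transfer": {"prompt": "Please confirm the transfer.", "gates": []},
}


def next_node(current: str, intent: str, slots: dict) -> str:
    """Evaluate the current node's logic gates in order and return the next node."""
    for condition, target in FLOW[current]["gates"]:
        if condition(intent, slots):
            return target
    # No gate matched: stay on the current node and repeat its prompt.
    return current


if __name__ == "__main__":
    node = next_node("start", "transfer_money", {})  # "ask_amount"
    node = next_node(node, "greeting", {})  # still "ask_amount": no gate matched
    node = next_node(node, "fallback", {"amount": "50"})  # "confirm_transfer"
    print(FLOW[node]["prompt"])  # Please confirm the transfer.
```

Every path has to be anticipated when the flow is designed. Adding flexibility means adding more nodes and gates, and that is the rigidity the efforts above try to relax.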

✨Message Abstraction Layer

The message abstraction layer holds predefined bot responses for each dialog turn. These responses are fixed, and in some cases a template is used to insert data and create personalised messages.

Managing the messages becomes a challenge as the chatbot application grows. Because the messages are static, their total number can be significant, and supporting multiple languages adds considerable complexity.

Whenever the tone or persona of the chatbot needs to change, all of these messages need to be revisited and updated.

This was also one of the first areas where LLMs were introduced, to leverage their Natural Language Generation (NLG) capabilities.
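Below is a minimal sketch of a message abstraction layer. It assumes messages are stored per message id and language and filled in with Python's standard `string.Template`. The message ids, texts and the English fallback are hypothetical. Every (id, language) pair is a separate hand-written entry. That is why the message count grows quickly, and why a change of tone or persona means revisiting each entry.

```python
from string import Template

# Hypothetical message store: one fixed template per (message id, language).
MESSAGES = {
    "greet_user": {
        "en": Template("Hello $name, how can I help you today?"),
        "de": Template("Hallo $name, wie kann ich Ihnen heute helfen?"),
    },
    "transfer_done": {
        "en": Template("Your transfer of $amount $currency has been sent."),
        "de": Template("Ihre Überweisung über $amount $currency wurde gesendet."),
    },
}


def render(message_id: str, lang: str = "en", **variables: str) -> str:
    """Look up a predefined message and insert the personalisation variables."""
    by_lang = MESSAGES[message_id]
    template = by_lang.get(lang, by_lang["en"])  # fall back to English if missing
    return template.substitute(**variables)


if __name__ == "__main__":
    print(render("greet_user", "de", name="Ada"))
    # Hallo Ada, wie kann ich Ihnen heute helfen?
    print(render("transfer_done", amount="50", currency="EUR"))
    # Your transfer of 50 EUR has been sent.
```

An NLG approach would replace the fixed strings with generated text conditioned on the same variables. This trades the maintenance burden for less control over the exact wording.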

✨ Out-Of-Domain

Out-of-domain questions are handled by knowledge bases & semantic similarity search. Knowledge bases were primarily used for QnA, with solutions relying on semantic search. In many regards, this could be considered an early version of RAG (Retrieval-Augmented Generation).
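Retrieval from a QnA knowledge base can be sketched in three steps. Vectorise the question, score it against every stored question, and return the best answer above a threshold. This is a simplified assumption, not how any particular product works. Bag-of-words cosine similarity stands in for the sentence embeddings a semantic search would normally use, and the knowledge-base entries and the 0.3 threshold are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical QnA knowledge base: (stored question, answer) pairs.
KNOWLEDGE_BASE = [
    ("How do I change my delivery address?", "Go to My Account > Addresses and edit your delivery address."),
    ("What is your refund policy?", "Refunds are issued within 14 days of receiving the returned item."),
    ("Do you ship internationally?", "Yes, we ship to most countries; shipping costs are shown at checkout."),
]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts: a simple stand-in for a sentence embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def answer_out_of_domain(question: str, threshold: float = 0.3):
    """Return the answer of the most similar stored question, or None if nothing is close."""
    q_vec = vectorize(question)
    best_score, best_answer = 0.0, None
    for kb_question, kb_answer in KNOWLEDGE_BASE:
        score = cosine(q_vec, vectorize(kb_question))
        if score > best_score:
            best_score, best_answer = score, kb_answer
    return best_answer if best_score >= threshold else None


if __name__ == "__main__":
    print(answer_out_of_domain("what is the refund policy"))  # refund answer (score 0.8)
    print(answer_out_of_domain("tell me a joke"))  # None
```

In a RAG pipeline, the retrieved text would be passed to an LLM as context for generating the reply instead of being returned verbatim.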

Conclusion

There has been much talk lately of Generative AI slop: unwanted AI-generated content that is seen as merely clogging the arteries of the web.

By contrast, conversational AI enabled by Generative AI is poised to become even more integral to our daily lives, blending seamlessly into many aspects of communication and interaction.

With advancements in natural language understanding and generation, conversational AI will offer more personalised and contextually relevant responses within conversations people want to have.

Additionally, I anticipate greater integration of conversational AI in sectors such as healthcare, education, and customer service, revolutionising how we access information and services.

Originally published on Medium.