In this article I put into plain terms the difference between chains and agents, and what will work best for certain applications.

Introduction

With the advent of Large Language Models (LLMs), a need arose to string inference queries together into longer applications. Chaining allows a sequence of events to solve more complex user queries, and it manages the multiple dialog turns when a user wants to have a longer conversation.

Hence a whole host of flow engineering applications were built to accommodate the chaining of prompts, as seen below:

There are two notable movements in the flow engineering tooling landscape. The first is traditional chatbot development frameworks (IDEs), which added chaining functionality to their flow builders.

The second movement in the flow engineering IDE landscape was new entrants into the market.

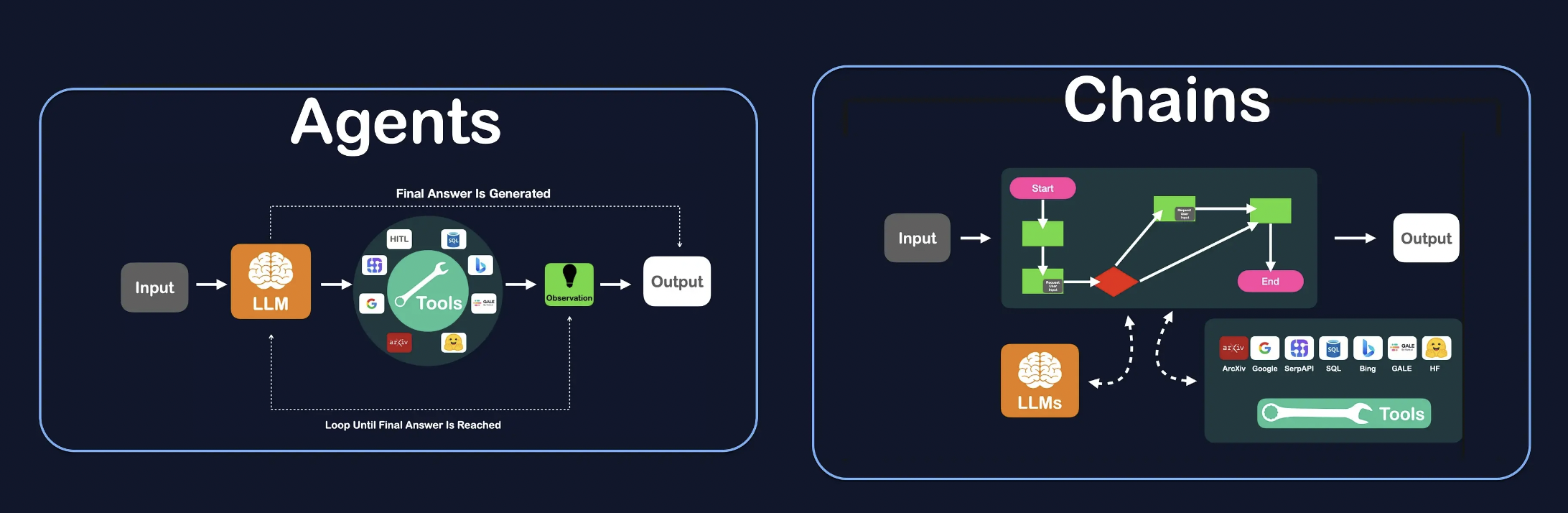

Chains

Prompt chaining is a technique used in prompt-based AI systems, where one prompt generates or influences another prompt in a sequence to achieve a specific outcome or task. Essentially, it’s a method of stringing together multiple prompts to guide the AI model towards a desired response or behaviour.

Some of the nodes in the chain can ask for user input at certain points, hence acting as a conversational UI.

For example, in the context of language generation, you might start with an initial prompt to introduce a topic or scenario. Then, based on the generated response from the model, you feed that response back to the LLM as the next prompt to further develop the conversation or refine the output.

It needs to be stated that this process consists of a hard-coded sequence of events with decision points along the way. This approach is tantamount to a state machine.
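The state-machine view of a chain can be sketched in a few lines of Python. This is a minimal illustration, not any framework's API: `fake_llm`, the state names, and the 20-character decision rule are all assumptions made up for the example. The point it shows is that the developer, not the model, fixes the order of steps and the decision points.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a real LLM call; it only echoes the prompt.
def fake_llm(prompt: str) -> str:
    return f"<response to: {prompt}>"

# Each state runs one prompt, stores the result, and names the next state.
def summarise(ctx: Dict[str, str]) -> str:
    ctx["summary"] = fake_llm(f"Summarise: {ctx['input']}")
    # Hard-coded decision point (an arbitrary rule for illustration).
    return "translate" if len(ctx["input"]) > 20 else "done"

def translate(ctx: Dict[str, str]) -> str:
    # The previous response is fed back in as part of the next prompt.
    ctx["translation"] = fake_llm(f"Translate to French: {ctx['summary']}")
    return "done"

STATES: Dict[str, Callable[[Dict[str, str]], str]] = {
    "summarise": summarise,
    "translate": translate,
}

def run_chain(user_input: str) -> Tuple[Dict[str, str], List[str]]:
    ctx = {"input": user_input}
    state = "summarise"
    visited: List[str] = []
    while state != "done":
        visited.append(state)
        state = STATES[state](ctx)
    return ctx, visited
```

Calling `run_chain("a considerably longer user request")` visits both states, while a short input stops after the first. Either way, the possible paths are all known before the chain runs.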

Agents

Somehow it feels to me that it is currently overlooked, but Autonomous AI Agents represent a pivotal advancement in technology.

Agents, equipped with artificial intelligence, have the capacity to:

- Operate independently,

- Make decisions &

- Take actions without constant human intervention.

In the future, autonomous AI agents are set to revolutionise industries ranging from healthcare and finance to manufacturing and transportation.

There are however considerations regarding accountability, transparency, ethics and responsibility in decision-making.

Despite these challenges, the future with autonomous AI agents holds tremendous promise. As technology continues to evolve, these agents will become increasingly integrated into our daily lives.

Agents & Chains

Consider the table below, which draws a comparison between autonomous agents & prompt chaining.

Flexibility / Autonomy / Reasoning

Agents have a greater level of flexibility and real autonomy. They can reason, and they can select tools as and when required, in whatever sequence the agent deems fit.

With prompt chaining, users have direct control over the direction of the conversation or task. By providing prompts at each step, users can guide the AI model to produce responses that align with their goals. This level of control is particularly valuable in applications where specific outcomes or behaviours are desired.

Granular State Based

Prompt chaining allows a more granular, state-based approach to applications. Chains also allow prompts to be adjusted iteratively based on the flow’s performance, so makers can improve the flows toward producing more accurate and relevant outputs over time. This iterative improvement process enhances the effectiveness and utility of the AI system.

This granular nature of chains allows conversation designers to still play an important role in crafting compelling conversational experiences which improve over time.

RPA Approach

Prompt chaining can be utilised in Robotic Process Automation (RPA) implementations. In the context of RPA, prompt chaining can involve a series of prompts given to an AI model or a bot to guide it through the steps of a particular task or process automation.

By incorporating prompt chaining into RPA implementations, organisations can enhance the effectiveness, adaptability, and transparency of their automation efforts, ultimately improving efficiency and reducing operational costs.

Human In The Loop

Prompt chaining is ideal for involving humans. By default, chains are a dialog-turn-based conversational UI, where the dialog or flow moves forward based on user input.

There are instances where chains do not depend on user input, and these implementations are normally referred to as prompt pipelines.

Agents can also have a tool for human interaction. The Human-In-The-Loop (HITL) tool is ideal when the agent reaches a point where existing tools do not suffice for the query; the agent can then use it to reach out to a human for input.
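As a rough sketch of the idea, a human-in-the-loop tool can be treated as just another callable that the agent falls back on. Everything here is hypothetical: the keyword matching stands in for whatever tool selection a real agent performs, and `make_hitl_tool` and `answer` are names invented for this example.

```python
from typing import Callable, Dict

def make_hitl_tool(ask: Callable[[str], str] = input) -> Callable[[str], str]:
    """Wrap a way of asking a human (console input by default) as a tool."""
    def human_tool(query: str) -> str:
        return ask(f"Agent needs help with: {query}\n> ")
    return human_tool

def answer(query: str,
           tools: Dict[str, Callable[[str], str]],
           human_tool: Callable[[str], str]) -> str:
    # Naive tool selection by keyword; a real agent would let the LLM decide.
    for keyword, tool in tools.items():
        if keyword in query.lower():
            return tool(query)
    # No existing tool suffices, so reach out to a human for input.
    return human_tool(query)
```

Passing the `ask` function in as a parameter keeps the sketch testable; in a deployed agent it could equally be a chat message or a ticket to a support queue.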

Managing Cost

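Managing costs is more feasible with a chained approach than with an agent approach, because a chain makes a number of LLM calls that is fixed by its design, whereas an agent decides at run time how many steps to take. One method to mitigate cost barriers is self-hosting the core LLM infrastructure, which reduces the significance of the number of requests made to the LLM in terms of cost.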
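A back-of-the-envelope calculation illustrates why the chained approach is easier to budget. The token counts and the price used in the usage note are made-up placeholders, not real vendor pricing, and the model assumes every call uses the same number of tokens.

```python
from typing import Tuple

def chain_cost(num_steps: int, tokens_per_call: int,
               price_per_1k_tokens: float) -> float:
    """Cost of a chain: the number of calls is known at design time."""
    return num_steps * tokens_per_call / 1000 * price_per_1k_tokens

def agent_cost_bounds(min_steps: int, max_steps: int, tokens_per_call: int,
                      price_per_1k_tokens: float) -> Tuple[float, float]:
    """Cost of an agent: only a range is known, since steps vary per query."""
    return (
        chain_cost(min_steps, tokens_per_call, price_per_1k_tokens),
        chain_cost(max_steps, tokens_per_call, price_per_1k_tokens),
    )
```

With a hypothetical 1,000 tokens per call at 0.5 currency units per 1,000 tokens, a three-step chain always costs 1.5 units, while an agent capped at eight steps can land anywhere between 0.5 and 4.0 units per query.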

Optimising Latency

Optimising latency through self-hosted local LLMs involves hosting the language model infrastructure locally, which reduces the time it takes for data to travel between the user’s system and the model. This localisation minimises network latency, resulting in faster response times and improved overall performance.

LLM Choose Action Sequence

LLMs can choose action sequences for agents by employing a sequence generation mechanism. This involves the LLM generating a series of actions based on the input provided to it. These actions can be determined through a variety of methods such as reinforcement learning, supervised learning, or rule-based approaches.
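A minimal agent loop makes the contrast with a chain concrete: the model, not the developer, picks each next action. In this sketch `stub_llm` is a hypothetical, scripted stand-in for a real LLM, and the two tools and the `finish` action are invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

def add(args: str) -> str:
    a, b = args.split(",")
    return str(int(a) + int(b))

def shout(args: str) -> str:
    return args.upper()

TOOLS: Dict[str, Callable[[str], str]] = {"add": add, "shout": shout}

# One scratchpad entry: (action, action_input, observation).
Step = Tuple[str, str, str]

def stub_llm(goal: str, scratchpad: List[Step]) -> Tuple[str, str]:
    """Hypothetical LLM stand-in: returns the next (action, input) pair."""
    if not scratchpad:
        return ("add", "2,3")
    if len(scratchpad) == 1:
        return ("shout", f"the answer is {scratchpad[-1][2]}")
    return ("finish", scratchpad[-1][2])

def run_agent(goal: str,
              llm: Callable[[str, List[Step]], Tuple[str, str]] = stub_llm,
              max_steps: int = 5) -> str:
    scratchpad: List[Step] = []
    for _ in range(max_steps):
        action, action_input = llm(goal, scratchpad)
        if action == "finish":
            return action_input
        # Run the chosen tool and record the observation for the next turn.
        observation = TOOLS[action](action_input)
        scratchpad.append((action, action_input, observation))
    return "stopped: step limit reached"
```

The loop itself contains no fixed sequence; the `max_steps` cap is the only hard limit, which is also what keeps an agent's cost and latency bounded.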

Seamless Tool Introduction

With autonomous agents, agent tools can be introduced seamlessly to update and enhance the agent capabilities.
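One way to picture seamless tool introduction is a registry that the agent consults at run time. The `ToolRegistry` class below is a hypothetical sketch rather than any framework's API; the assumption is that the tool descriptions are placed in the agent's prompt so that the LLM can see what is available.

```python
from typing import Callable, Dict, List

class ToolRegistry:
    """Holds the tools an agent may call, keyed by name."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name: str, description: str):
        """Decorator that adds a function to the registry under `name`."""
        def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
            self._tools[name] = fn
            self._descriptions[name] = description
            return fn
        return decorator

    def names(self) -> List[str]:
        return sorted(self._tools)

    def describe(self) -> str:
        """Text listing of the tools, e.g. for inclusion in the agent prompt."""
        return "\n".join(f"{n}: {self._descriptions[n]}" for n in self.names())

    def call(self, name: str, arg: str) -> str:
        return self._tools[name](arg)

registry = ToolRegistry()

@registry.register("echo", "Repeat the input back.")
def echo(text: str) -> str:
    return text
```

Adding a capability later is then a matter of registering one more function; the agent loop that reads `registry.describe()` and dispatches through `registry.call()` does not need to change.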

Design Canvas Approach

A prompt chaining design canvas IDE (Integrated Development Environment) would provide a visual interface for creating, editing, and managing prompt chains. Here’s a conceptual outline of what features such an IDE might include: Visual Prompt Editor, Prompt Library, Connection Management, Variable Management, Preview and Testing, etc.

Overall, a prompt chaining design canvas IDE would provide a user-friendly environment for designing, implementing, and managing complex conversational flows using a visual, intuitive interface.

No/Low-Code IDEs

Agents typically involve a pro-code development approach, where developers write and customise code to define the behaviour and functionality of the agents. Conversely, chains mostly follow a design canvas approach, where users design workflows or sequences of actions visually using a graphical interface or canvas. This visual approach simplifies the creation and modification of processes, making it more accessible to users without extensive coding expertise.

I need to add that there are agent IDEs like FlowiseAI, LangFlow, Stack and others.

Previously published on Medium.